As if the furore over fake news wasn't enough to destabilise the media, business and our democratic systems, and to breed deep mistrust of them, there are now deepfakes.

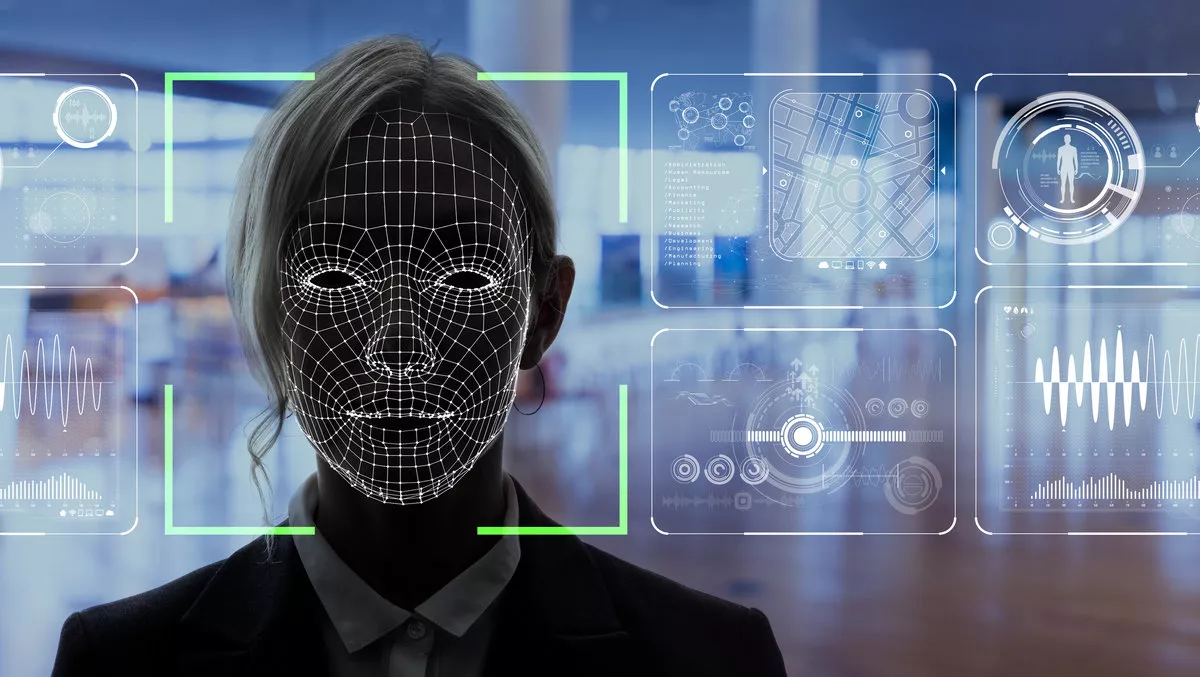

In the past couple of weeks there have been several high-profile examples of deepfakes, in which deep learning, an advanced subset of AI, is used to manipulate footage into fake videos that look authentic.

The most notable was a video of Facebook CEO Mark Zuckerberg declaring “whoever controls the data, controls the truth”.

This video was produced in response to an earlier doctored video of Nancy Pelosi, in which the House Speaker was made to appear drunk by having her speech slurred. Facebook refused to remove that video.

The Zuckerberg video, in which he appears to talk about Facebook's plans for world domination, was created to test whether Facebook would stand by its refusal to remove posts despite the misinformation they contain.

In its most sophisticated form, a deepfake is an AI-rendered fake video that looks so authentic it cannot be detected by the naked eye.

This is a real source of concern: if you can't distinguish between real videos and AI-generated ones, how do you know whether anything you watch is real?

The risk grows as the technology improves, because the differences from genuine footage that can still be spotted today will become harder and harder to discern.

The methods used to create deepfake videos vary considerably.

Some are trivial. In the Nancy Pelosi video, for example, the slurred-speech effect was achieved simply by slowing the footage to 70% of its original speed.
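Slowing a clip also stretches its audio track. The minimal Python sketch below, which uses NumPy and a synthetic test tone rather than any real footage, shows the naive version of this operation: resampling a signal to 70% speed makes it about 1.43 times longer and, as a side effect, lowers its pitch by the same factor. The function name `slow_down` is hypothetical, and the sketch makes no claim about exactly how the Pelosi clip was edited.

```python
import numpy as np

def slow_down(samples: np.ndarray, speed: float) -> np.ndarray:
    """Naively slow a mono signal to `speed` times its original rate.

    Hypothetical helper for illustration. speed=0.7 means 70% speed: played
    at the same sample rate, the output lasts about 1/0.7 = 1.43x longer.
    Because this only resamples, pitch also drops by the same factor.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    n = len(samples)
    out_len = int(n / speed)
    # Output sample i reads the input at fractional position i * speed,
    # using linear interpolation between neighbouring input samples.
    positions = np.arange(out_len) * speed
    return np.interp(positions, np.arange(n), samples)

rate = 8000                          # samples per second
t = np.arange(rate) / rate           # 1 second of audio
tone = np.sin(2 * np.pi * 440 * t)   # synthetic 440 Hz test tone
slowed = slow_down(tone, 0.7)
print(len(slowed) / rate)            # about 1.43 seconds
```

Pitch-preserving time stretching is possible with more elaborate signal processing, but even this naive version shows how little is needed to change how speech sounds.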

However, the most sophisticated deepfakes use deep learning.

For instance, one method uses an autoencoder architecture that “compresses” an image of a face into its basic features (the latent vector) and then “decompresses” it using a decoder trained on the face you want to paste onto the original image.
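The data flow of such a face swap can be sketched in a few lines of NumPy. This is a minimal, untrained sketch assuming one common variant of the approach: a single encoder shared by both identities and a separate decoder for each. The image size, latent size, layer shapes and all function names are hypothetical, and the weights are random, so the "fake" output here is noise; a real system would first train the encoder and decoders to minimise reconstruction error on many aligned face crops of each person.

```python
import numpy as np

rng = np.random.default_rng(0)

PIXELS = 64 * 64   # hypothetical: a flattened 64x64 grayscale face crop
LATENT = 128       # hypothetical size of the latent vector

def init_layer(n_in, n_out):
    # Small random weights; a real system would learn these by training.
    return rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_out, n_in))

# One encoder shared by both identities...
encoder = init_layer(PIXELS, LATENT)
# ...and one decoder per identity (A = original face, B = face to paste in).
decoder_a = init_layer(LATENT, PIXELS)
decoder_b = init_layer(LATENT, PIXELS)

def encode(image):
    """'Compress' a flattened image to its latent vector."""
    return np.tanh(encoder @ image)

def decode(latent, decoder):
    """'Decompress' a latent vector back to pixel values in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-(decoder @ latent)))

def reconstruction_loss(image, decoder):
    # Training would minimise this for A-images with decoder_a and for
    # B-images with decoder_b, updating the shared encoder on both.
    return np.mean((decode(encode(image), decoder) - image) ** 2)

def swap_face(image_of_a):
    """The swap: encode a frame of A, then decode it with B's decoder."""
    return decode(encode(image_of_a), decoder_b)

frame_a = rng.random(PIXELS)   # stand-in for a real, aligned face crop of A
fake = swap_face(frame_a)
print(fake.shape, reconstruction_loss(frame_a, decoder_a))
```

The shared encoder is the key design choice in this setup: because it must serve both decoders, it is pushed to capture what the two faces have in common, such as pose and expression, while each decoder supplies the identity-specific detail.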

The threat potential

Deepfakes can be used for good as well as for harm.

People's growing dependence on social and digital media as their only source of information about the “outside world” could dramatically amplify the political impact of such videos.

If you can generate a video of Barack Obama cursing Donald Trump, what stops you from generating a video of a politician cursing minority groups before elections?

Fake news remains effective even after it has been proven false.

The public stain may therefore never be fully removed. And politicians aren't the only ones with reason to be afraid: what happens if an ex-partner decides to put your face into a revenge porn video? Celebrities such as Gal Gadot have already had their faces pasted into pornographic clips.

Deep learning is being used for more and more everyday tasks, which makes its misuse much harder to regulate.

Paving the way forward

Distinguishing real from fake footage requires analysing the output footage for inconsistencies in its low-level features.

Deep learning classifiers are well suited to this task because they can inspect the raw features of an image to detect the tell-tale signs of fake images or videos.

For instance, in the autoencoder method described above, the decompressed image is of poorer quality (e.g., some pixels lose their true colour), creating inconsistencies that are invisible to the naked eye but can be detected by a deep learning classifier.
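To make the idea of a low-level inconsistency concrete, here is a minimal NumPy sketch that is not a deep learning classifier. It assumes, for illustration only, that a lossy reconstruction behaves like a 3x3 box blur, and it measures fine-detail ("high-frequency") energy with a discrete Laplacian filter. Random noise stands in for a real frame, and the function names are hypothetical.

```python
import numpy as np

def box_blur(img):
    """3x3 mean filter with edge padding: a crude stand-in for lossy decoding."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros_like(img, dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w]
    return out / 9.0

def high_freq_energy(img):
    """Mean squared response of a discrete Laplacian (fine-detail energy)."""
    lap = (-4 * img[1:-1, 1:-1]
           + img[:-2, 1:-1] + img[2:, 1:-1]
           + img[1:-1, :-2] + img[1:-1, 2:])
    return float(np.mean(lap ** 2))

rng = np.random.default_rng(1)
real = rng.random((64, 64))   # stand-in for a genuine, detailed frame
fake = box_blur(real)         # stand-in for an autoencoder reconstruction

# The smoothed "fake" loses fine detail, so its Laplacian energy is lower.
print(high_freq_energy(real), high_freq_energy(fake))
```

This hand-coded feature only illustrates the principle. A convolutional classifier, like the one in the Adobe and UC Berkeley study, learns its own filters for such traces from labelled examples of real and fake images rather than relying on a single hand-picked one.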

A research group from Adobe and UC Berkeley trained a convolutional neural network classifier to distinguish real from fake images with 99% accuracy, compared with only 53% accuracy for humans.

However, as in cybersecurity, the first step towards a solution is understanding the problem and how it could affect you.

Once the risk of deepfakes is as widely understood as the risk of malware, one can turn to deep learning experts to implement detection solutions that outperform human capability and help meet this challenge.